QLanners Week 3

Revision as of 17:25, 19 September 2017

Hack a Webpage

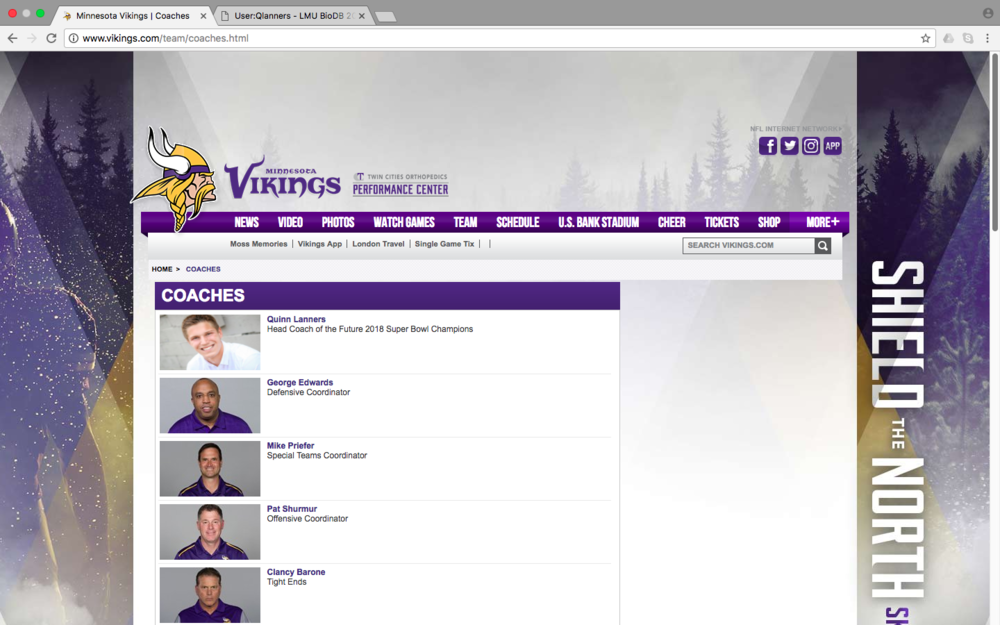

Image of the hacked webpage without the developer tools showing. The changes add an image of, and text about, the head coach of the Minnesota Vikings.

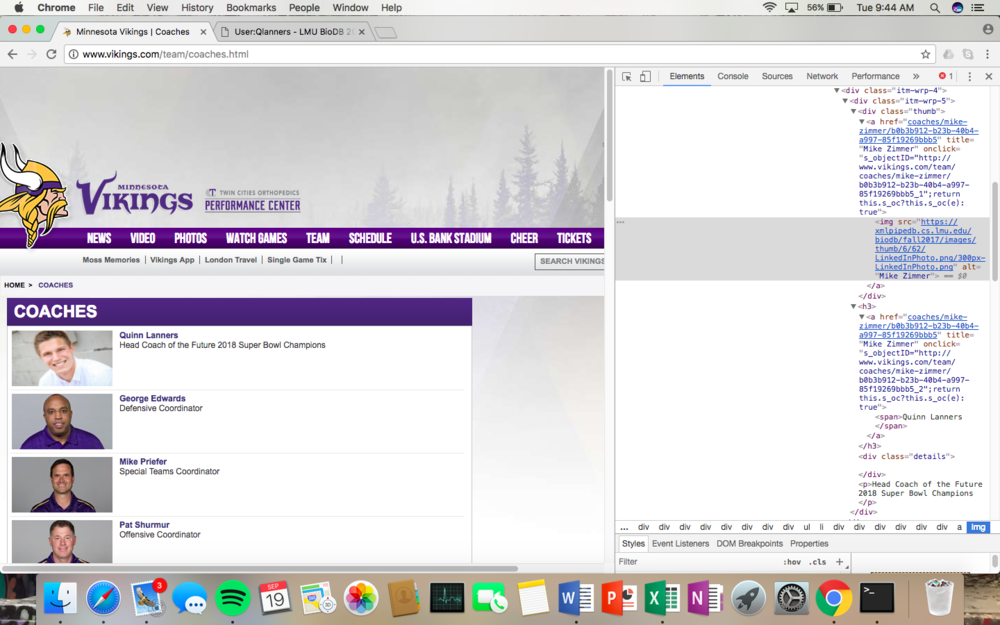

Image of the hacked webpage with the developer tools showing. The changes add an image of, and text about, the head coach of the Minnesota Vikings.

ExPASy translation server and curl command

Command used to retrieve info at a raw-data level:

```shell
curl -X POST -d "pre_text=cgatggtacatggagtccagtagccgtagtgatgagatcgatgagctagc&output=Verbose&code=Standard&submit=Submit" http://web.expasy.org/cgi-bin/translate/dna_aa
```
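The `-d` string is a single URL-encoded form body, made of key=value pairs joined by `&`. The sketch below assumes bash and rebuilds that body so the DNA sequence can be swapped out. The helper name `expasy_payload` is hypothetical. The field names and values are copied from the command above. The sequence is assumed to contain only plain letters, so no extra URL encoding is done.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the form body sent by the curl command above.
# Field names/values come from that command; the sequence is assumed to be
# plain letters (a/c/g/t), so no URL encoding is applied.
expasy_payload() {
  local dna="$1"
  printf 'pre_text=%s&output=Verbose&code=Standard&submit=Submit' "$dna"
}

# Print the body for a short example sequence (no network access needed).
expasy_payload "atggcc"
echo
```

With the helper defined and a sequence stored in `$dna`, running `curl -X POST -d "$(expasy_payload "$dna")" http://web.expasy.org/cgi-bin/translate/dna_aa` sends the same kind of body as the original command. Because `-d` already makes curl send a POST request, the `-X POST` flag is redundant but harmless.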

ExPASy translation server output questions

Links for question 1:

- http://www.w3.org/TR/html4/loose.dtd : The HTML 4.01 Transitional document type definition (DTD), "which includes presentation attributes and elements that W3C expects to phase out as support for style sheets matures"

- http://web.expasy.org/favicon.ico : The small logo (favicon) shown in the browser tab

- /css/sib_css/sib.css : A stylesheet specifying how the page should be formatted

- /css/sib_css/sib_print.css : A stylesheet specifying how the page should be formatted for printing

- /css/base.css : Another stylesheet for the page's layout and formatting

- http://www.isb-sib.ch : Link to the Swiss Institute of Bioinformatics homepage

- http://www.expasy.org : Link to the ExPASy Bioinformatics Resource Portal homepage

- http://web.expasy.org/translate : Link to the Translate tool page (with no input entered)

Identifiers for question 2:

- sib_top : The very top of the page

- sib_container : The container for the whole page returned

- sib_header_small : The small bar header at the top of the page

- sib_expasy_logo : The logo in the top left corner of the page

- resource_header : Purpose unclear; possibly another formatting section for the page header

- sib_header_nav : The top right of the page with navigational buttons to home and contact

- sib_body : The main part of the page, containing the returned text and reading frames

- sib_footer : The footer at the bottom of the page

- sib_footer_content : The text/content included in the footer at the bottom of the page

- sib_footer_right : The text/content in the bottom right footer of the page

- sb_footer_gototop : The "go to top" button in the footer

Command used to retrieve just the answers:

```shell
curl -X POST -d "pre_text=cgatggtacatggagtccagtagccgtagtgatgagatcgatgagctagc&output=Verbose&code=Standard&submit=Submit" http://web.expasy.org/cgi-bin/translate/dna_aa | sed "1,47d" | sed "13q" | sed "s/<[^>]*>//g"
```
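The first two sed stages can be checked without the network. The sketch below assumes bash and feeds numbered lines from `seq` in place of the ExPASy HTML. `sed "1,47d"` deletes lines 1 to 47. `sed "13q"` then prints 13 more lines and quits. Together they keep lines 48 to 60 of the original input, which is the same selection as a single `sed -n "48,60p"`. The function names are hypothetical. The specific numbers 47 and 13 presumably fit the page as it was returned in 2017 and would need rechecking if the layout changed.

```shell
#!/usr/bin/env bash
# Stand-in for the curl output: 100 numbered lines.
# Stage 1 deletes lines 1-47; stage 2 prints 13 more lines and quits.
two_stage() {
  seq 1 100 | sed "1,47d" | sed "13q"
}

# Equivalent single sed call: print only lines 48 through 60.
one_stage() {
  seq 1 100 | sed -n "48,60p"
}

two_stage | head -n 1   # first kept line: 48
two_stage | tail -n 1   # last kept line: 60
```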

Electronic Laboratory Notebook

In the second part of this week's assignment, we used the pages Introduction to the Command Line, Dynamic Text Processing, and The Web from the Command Line to complete the tasks. To access the webpage, my partner and I followed the format on The Web from the Command Line page for posting data to a webpage's form and returning the resulting HTML. By inspecting the [1] page with the developer tools, we determined the arguments that needed to be included in the command. We then compared the HTML returned in the terminal with the HTML shown in the result page's developer tools to confirm that our command was correct.
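The keys in a POST body like the one above correspond to the `name` attributes of the form's input elements, and this is what the developer tools reveal. The sketch below assumes bash and lists those names from a form fragment. The fragment is a hypothetical, simplified stand-in and is not the real ExPASy markup. Only its field names are taken from the curl command. The function name `form_field_names` is also hypothetical.

```shell
#!/usr/bin/env bash
# Print the value of every name="..." attribute, one per line.
form_field_names() {
  grep -o 'name="[^"]*"' | sed 's/^name="//; s/"$//'
}

# Hypothetical, simplified form; the real page's markup may differ.
sample_form='<form action="/cgi-bin/translate/dna_aa" method="post">
<textarea name="pre_text"></textarea>
<select name="output"><option value="Verbose">Verbose</option></select>
<select name="code"><option value="Standard">Standard</option></select>
<input type="submit" name="submit" value="Submit">
</form>'

printf '%s\n' "$sample_form" | form_field_names
```

Each printed name becomes a key in the `-d` string. The chosen option (for example `Verbose` or `Standard`) becomes its value.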

Once the command worked in the terminal, we went through the links and identifiers in the returned HTML. By looking up each of these items in the browser, we compiled the lists above, along with a description of each element. For this section, my partner Dina Bashour and I worked together in person and split up the work to be more efficient.
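The lists above were compiled by hand in the browser. The sketch below can serve as a rough cross-check. It assumes bash and a grep that supports `-o` and `-E` (GNU and BSD grep both do), and it pulls quoted `href`/`src` values and `id` values out of HTML piped into it. The function names and the sample fragment are hypothetical. The fragment reuses a few names from the lists above but is not the real ExPASy markup. The sketch only catches attributes written literally in double quotes. The DTD URL, for example, sits in the DOCTYPE line rather than in an `href` or `src` attribute, so it would be missed.

```shell
#!/usr/bin/env bash
# List link targets written as href="..." or src="...".
list_links() {
  grep -oE '(href|src)="[^"]*"' | sed -E 's/^(href|src)="//; s/"$//'
}

# List element ids; requiring whitespace before "id" avoids matching e.g. grid="...".
list_ids() {
  grep -oE '[[:space:]]id="[^"]*"' | sed -E 's/^[[:space:]]id="//; s/"$//'
}

# Hypothetical fragment using a few names from the lists above.
page='<link rel="stylesheet" href="/css/base.css">
<div id="sib_container"><div id="sib_body">
<a href="http://www.expasy.org">ExPASy</a></div></div>'

printf '%s\n' "$page" | list_links
printf '%s\n' "$page" | list_ids
```

On the real page, the raw output of the curl command could be piped into `list_links` or `list_ids` in the same way.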

To extract just the answers from the returned HTML, we used the material covered on the Dynamic Text Processing page. We used sed to delete the lines that came before the section of the HTML containing our answers and to stop printing after that section. We then used a more sophisticated substitution to delete any text between the < and > symbols (the HTML tags). This left just the text of the answers.
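The tag-removal substitution can be tried on its own. The sketch below assumes bash. The function name `strip_tags` and the sample lines are hypothetical and are not real ExPASy output. The pattern `<[^>]*>` matches a `<`, then any run of characters other than `>`, then `>`. That stops each match at the first closing bracket. A greedy `<.*>` would instead delete everything from the first `<` to the last `>` on the line, including the text between tags. Because sed works one line at a time, a tag that is split across two lines is not removed.

```shell
#!/usr/bin/env bash
# Remove every "<...>" run on each line, as in the last stage of the pipeline.
strip_tags() {
  sed "s/<[^>]*>//g"
}

# Hypothetical sample line: all tags are removed, the text between them stays.
printf '%s\n' '<td><b>Frame 1</b> Met Val</td>' | strip_tags

# A tag broken across lines survives: "<a" has no ">" on its own line.
printf '%s\n' '<a' 'href="x">link</a>' | strip_tags
```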