Revision as of 06:45, 20 September 2017

Edward Bachoura: Journal Week 3

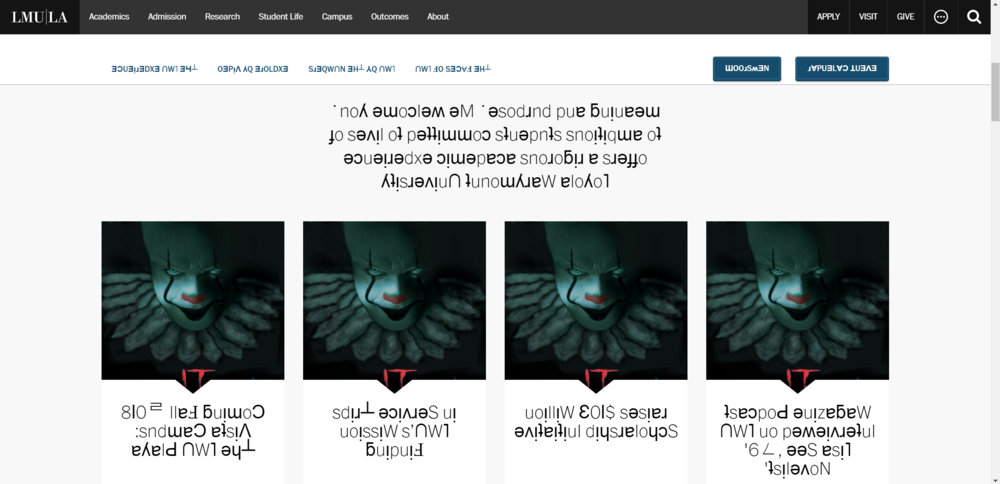

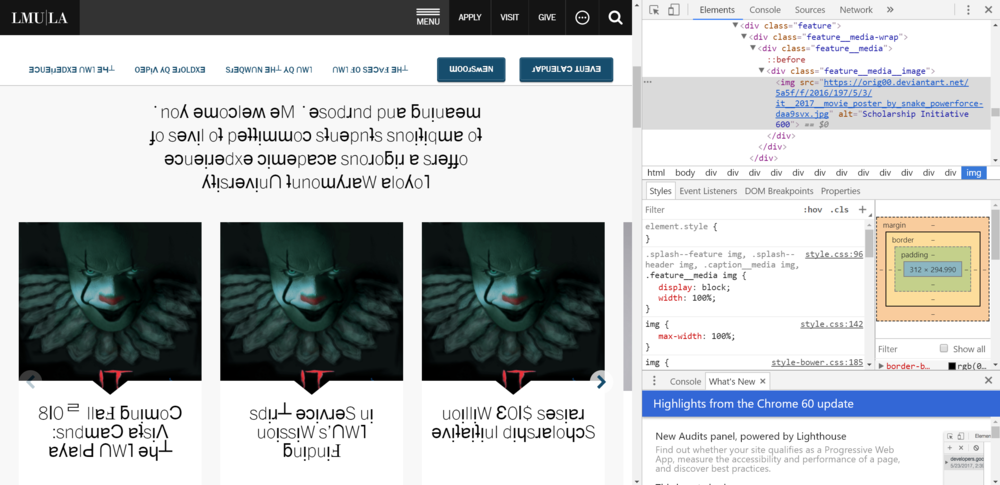

Hack-A-Page

"DM'ing" the Server with Curl

Final curl code:

curl -X POST -d "submit=Submit&pre_text=cgatggtacatggagtccagtagccgtagtgatgagatcgatgagctagc&output=Verbose&code=Standard" http://web.expasy.org/cgi-bin/translate/dna_aa
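The `-d` string above is an ordinary form-encoded body: `&` separates the fields and `=` separates each field name from its value. With `-d`, curl already sends a POST with `Content-Type: application/x-www-form-urlencoded`, so `-X POST` is redundant but harmless. As a minimal sketch, the body can be assembled by a small function so that only the DNA sequence changes between runs. The helper name `build_expasy_data` is hypothetical and not part of the assignment; the field names and values are copied from the command above. A letters-only sequence needs no URL-encoding; input containing spaces or other special characters would need it (for example via curl's `--data-urlencode`).

```shell
#!/bin/sh
# Hypothetical helper (not from the assignment): build the form-encoded body
# sent with -d. Field names and values are copied from the final curl command.
build_expasy_data() {
  dna="$1"   # DNA sequence to translate; letters only, so no URL-encoding needed
  printf 'submit=Submit&pre_text=%s&output=Verbose&code=Standard' "$dna"
}

# Print the body for the sequence used in this assignment.
build_expasy_data cgatggtacatggagtccagtagccgtagtgatgagatcgatgagctagc
echo
```

Used with curl, `curl -X POST -d "$(build_expasy_data cgatggtacatggagtccagtagccgtagtgatgagatcgatgagctagc)" http://web.expasy.org/cgi-bin/translate/dna_aa` should send the same request as the original command.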

Using the Command Line to Extract Just the Answers

Final curl code for answers:

curl -X POST -d "submit=Submit&pre_text=cgatggtacatggagtccagtagccgtagtgatgagatcgatgagctagc&output=Verbose&code=Standard" http://web.expasy.org/cgi-bin/translate/dna_aa | grep -E '<(BR|PRE)>' | sed 's/<[^>]*>//g'
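The pipeline filters in two stages. `grep` keeps only the lines that contain an opening `<BR>` or `<PRE>` tag, and `sed` then deletes every tag on those lines. The sketch below wraps those two stages in a filter (the name `extract_answers` is hypothetical) so they can be tried offline. It runs them on a made-up response. The sample text is an assumption for illustration and is not real ExPASy output.

```shell
#!/bin/sh
# The grep/sed stages of the final command, wrapped as a reusable filter.
# extract_answers is a hypothetical name, not part of the assignment.
extract_answers() {
  # Stage 1 (grep): keep only lines containing an opening <BR> or <PRE> tag.
  # Stage 2 (sed): delete every tag, i.e. a "<", any non-">" characters, a ">".
  grep -E '<(BR|PRE)>' | sed 's/<[^>]*>//g'
}

# Made-up stand-in for a server response (NOT real ExPASy output).
sample='<HTML><BODY>
<H2>Results</H2>
<PRE>FRAME_ONE_PLACEHOLDER</PRE>
<P>footer text</P>
FRAME_TWO_PLACEHOLDER<BR>
</BODY></HTML>'

printf '%s\n' "$sample" | extract_answers
# prints:
# FRAME_ONE_PLACEHOLDER
# FRAME_TWO_PLACEHOLDER
```

Piping the original curl command into `extract_answers` should then behave like the final command above. Note that the `grep` pattern is case-sensitive and matches only opening tags, so a line that carries only `</PRE>` is dropped.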

Electronic Notebook

I used the LMU page because I was too lazy to find another page with easily accessible data. I used a website called upsidedowntext.com to flip all of the text on the page upside down, and, in honor of the movie It, I replaced all of the images with a movie ad for It. Once I understood what I was actually being asked to do, the first part of the curl assignment didn't take long. I simply looked at the notes on the BioDB 2017 homepage and adapted some of the code examples. The next part took a lot of trial and error. I got the first part (grep) pretty easily, but it took many attempts to get sed to clean the output down to just the answers.
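A common pitfall when writing a tag-stripping sed expression is greedy matching. This is an illustration only and not a record of the attempts described above. With `<.*>`, the `.*` runs to the last `>` on the line, so the answer between two tags is deleted along with them. The bracket expression `[^>]*` in the final command cannot cross a `>`, so each tag is removed on its own, which matches the "delete everything between two characters" idea in the StackOverflow reference. The function names and the sample line below are hypothetical.

```shell
#!/bin/sh
# Greedy: ".*" pairs the first "<" with the LAST ">" on the line,
# so everything in between (including the answer) is deleted.
strip_greedy() { sed 's/<.*>//g'; }

# Bracket expression: "[^>]*" cannot cross a ">", so each tag is matched
# and deleted separately (the pattern used in the final command).
strip_tags() { sed 's/<[^>]*>//g'; }

line='<PRE>ANSWER_TEXT</PRE>'   # made-up example line, not real ExPASy output
printf '%s\n' "$line" | strip_greedy   # prints an empty line
printf '%s\n' "$line" | strip_tags     # prints ANSWER_TEXT
```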

Acknowledgements

Hayden Hinsch & I met in person and texted regarding the Week 3 Journal Assignment.

While I worked with the people noted above, this individual journal entry was completed by me and not copied from another source.

Ebachour (talk) 23:29, 19 September 2017 (PDT)

References

LMU BioDB 2017. (2017). Week 3. Retrieved September 19, 2017, from https://xmlpipedb.cs.lmu.edu/biodb/fall2017/index.php/Week_3

ExPASy - Translate tool. (2017). Retrieved September 19, 2017, from http://web.expasy.org/translate/

StackOverflow. (2017). Retrieved September 19, 2017, from https://stackoverflow.com/questions/13096469/using-sed-delete-everything-between-two-characters