Hhinsch Week 3

Contents

- 1 Hhinsch Week 3 Individual Journal Entry

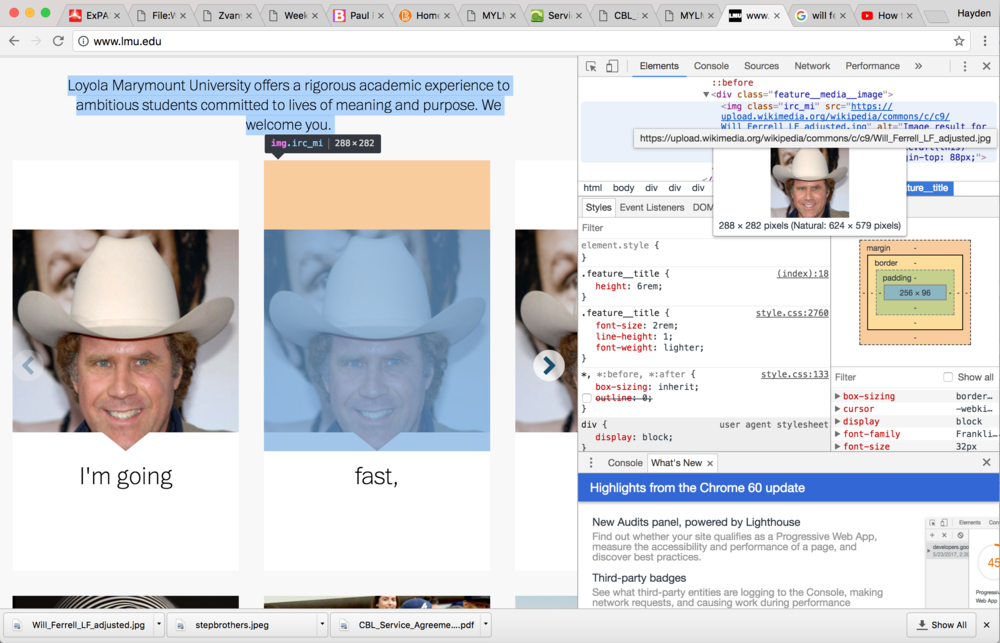

- 1.1 Screen Shot of Text and Image 'Hack' With Developer Tools Open

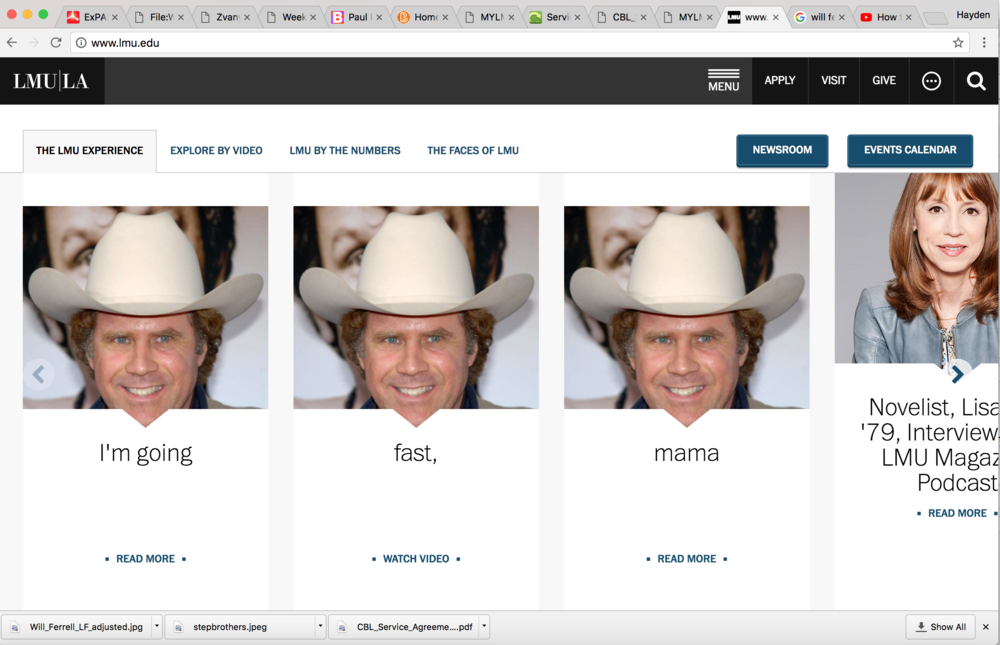

- 1.2 Screen Shot of Text and Image 'Hack' With Developer Tools Closed

- 1.3 The curl command that requests a translation at the command-line, raw-data level

- 1.4 Answers to the two questions regarding the ExPASy translation server’s output

- 1.5 The sequence of commands that extracts “just the answers” from the raw-data response

- 1.6 Electronic Notebook

- 1.7 Acknowledgements

- 1.8 References

- 1.9 Assignments

- 1.10 Hayden's Individual Journal Entries

- 1.11 Class Journal Entries

- 1.12 Electronic Notebook

- 1.13 Hayden's User Page

Hhinsch Week 3 Individual Journal Entry

Screen Shot of Text and Image 'Hack' With Developer Tools Open

Screen Shot of Text and Image 'Hack' With Developer Tools Closed

The curl command that requests a translation at the command-line, raw-data level

```shell
curl -d "submit=Submit&pre_text=actgcttcggtacagtcagatcgactacgaccccattcagtc" http://web.expasy.org/cgi-bin/translate/dna_aa
```
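The same request can be wrapped in a small shell function so that other sequences can be submitted without retyping the form data. This is a minimal sketch. The function names form_fields and translate_post are hypothetical, and it assumes the translate CGI keeps accepting the submit and pre_text fields used in the command above.

```shell
#!/bin/sh
# Minimal sketch; form_fields and translate_post are hypothetical helper names.

# DNA sequence from the curl command above.
seq="actgcttcggtacagtcagatcgactacgaccccattcagtc"

# Print the form data for the translate CGI: the same submit and
# pre_text fields as in the original command.
form_fields() {
  printf 'submit=Submit&pre_text=%s' "$1"
}

# curl -d sends the data as the body of a POST request
# (Content-Type: application/x-www-form-urlencoded).
# -s only hides curl's progress meter.
translate_post() {
  curl -s -d "$(form_fields "$1")" http://web.expasy.org/cgi-bin/translate/dna_aa
}
```

Calling translate_post "$seq" should return the same raw HTML as the original command. curl -d does not URL-encode its argument. Plain a, c, g and t letters need no encoding, but a sequence containing characters such as & or spaces would need curl's --data-urlencode option instead.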

Answers to the two questions regarding the ExPASy translation server’s output

- Yes, there are links to other pages within the ExPASy translation server's responses. Some of these links, such as /css/sib_css/sib.css, /css/sib_css/sib_print.css, and /css/base.css, point to stylesheets on the ExPASy server that take care of the visual aspect of the page. There are also links to other servers that carry out different operations.

- I noticed a few identifiers:

- id='sib_top'

- id='sib_container'

- id='sib_header_small'

- id="sib_expasy_logo"

- id="sib_link"

- id="expasy_link"

- id='resource_header'

- id='sib_header_nav'

- id='sib_body'

- id='sib_footer'

- id='sib_footer_content'

- id="sib_footer_right"

- id="sib_footer_gototop"

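These identifiers serve as short descriptions of the elements they label. For example, id="sib_expasy_logo" identifies the ExPASy logo. Each id value names a single element on the page so that stylesheets and scripts can refer to it.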
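Links and identifiers like the ones above can also be listed from the command line instead of being read by eye. The sketch below runs on a small made-up HTML fragment. The name sample_html and its contents are invented for illustration and are not copied from the ExPASy response, and extract_links and extract_ids are hypothetical helper names. The regular expressions are simple assumptions that allow the spacing around = and the mixed quote styles seen in the list above. They will miss unusual markup, so this is not a substitute for an HTML parser. grep -o is supported by GNU and BSD grep but is not part of POSIX.

```shell
#!/bin/sh
# Sketch: list href targets and id values from HTML on standard input.
# extract_links, extract_ids and sample_html are hypothetical names.

# Print each href="..." target, one per line.
extract_links() {
  grep -oE 'href="[^"]*"' | sed -E 's/^href="//; s/"$//'
}

# Print each id value; allows spaces around '=' and either quote style.
extract_ids() {
  grep -oE "id *= *[\"'][^\"']*[\"']" | sed -E "s/^id *= *[\"']//; s/[\"']\$//"
}

# Made-up fragment for illustration only (not the real ExPASy markup).
sample_html=$(cat <<'EOF'
<link rel="stylesheet" href="/css/sib_css/sib.css" />
<link rel="stylesheet" href="/css/base.css" />
<div id='sib_top'>
<div id = "sib_footer">
EOF
)

printf '%s\n' "$sample_html" | extract_links   # prints /css/sib_css/sib.css and /css/base.css
printf '%s\n' "$sample_html" | extract_ids     # prints sib_top and sib_footer
```

To run this against the real page, the curl output can be piped into extract_links or extract_ids in place of the sample.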

The sequence of commands that extracts “just the answers” from the raw-data response

```shell
curl "http://web.expasy.org/cgi-bin/translate/dna_aa?pre_text=cgatggtacatggagtccagtagccgtagtgatgagatcgatgagctagc&output=Verbose&code=Standard" | grep -E '<(BR|PRE)>' | sed 's/<[^>]*>//g'
```
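To see what each stage of that pipeline does without contacting the server, the same grep and sed filter can be run on a saved or made-up response. In this sketch, strip_to_answers and sample_response are hypothetical names. The sample markup is invented for illustration, so the real server output may be laid out differently.

```shell
#!/bin/sh
# Sketch: the grep | sed filter from the command above, applied to
# a made-up response instead of live server output.
# strip_to_answers and sample_response are hypothetical names.

# 1. grep -E keeps only lines containing a literal <BR> or <PRE> tag.
# 2. sed deletes every <...> tag left on those lines.
strip_to_answers() {
  grep -E '<(BR|PRE)>' | sed 's/<[^>]*>//g'
}

# Invented fragment for illustration; the real ExPASy layout may differ.
sample_response=$(cat <<'EOF'
<HTML><HEAD><TITLE>Translate</TITLE></HEAD>
<BODY>
Frame 1<BR>
<PRE>R W Y M E S S S R S D E I D E L</PRE>
<A HREF="/translate/">Back</A>
</BODY></HTML>
EOF
)

printf '%s\n' "$sample_response" | strip_to_answers
# Frame 1
# R W Y M E S S S R S D E I D E L
```

Two consequences follow from this design. First, a line of results that carries neither tag is dropped. Second, grep is case-sensitive here, so lowercase <br> or <pre> tags would be missed; grep -iE would match either case.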

Electronic Notebook

Acknowledgements

- I worked with both Eddie Bachoura and Zachary Van Ysseldyk in person and over text to collaborate and come up with my answers.

- Zachary Van Ysseldyk helped me after class use the sed and grep commands to remove the unwanted HTML code from the response.

- While I worked with the people noted above, this individual journal entry was completed by me and not copied from another source. Hhinsch (talk) 22:30, 19 September 2017 (PDT)

References

- I used the Dynamic Text Processing page to get a firmer grasp on using sed.

- I used The Web from the Command Line page to get a firmer grasp on curl.

- I used this web developer site (https://www.w3schools.com/tags/tag_div.asp) to find identifiers in the code.

- I used the Week 3 assignment page (https://xmlpipedb.cs.lmu.edu/biodb/fall2017/index.php/Week_3) multiple times to clarify the questions being asked and to access the pages mentioned above.
