Difference between revisions of "Mavila9 Week 8"

(→Methods & Results: methods) |

(→Data: image) |

||

| (11 intermediate revisions by the same user not shown) | |||

| Line 3: | Line 3: | ||

== Purpose == | == Purpose == | ||

| − | This investigation was done to analyze the data | + | This investigation was done to analyze the microarray data to determine changes in gene expression after a cold shock. |

== Methods & Results == | == Methods & Results == | ||

==== ANOVA: Part 1 ==== | ==== ANOVA: Part 1 ==== | ||

| − | |||

| − | |||

# A new worksheet was created named "wt_ANOVA". | # A new worksheet was created named "wt_ANOVA". | ||

# The first three columns containing the "MasterIndex", "ID", and "Standard Name" were copied from the "Master_Sheet" worksheet for the wt strain and pasted it into the "wt_ANOVA" worksheet. The columns containing the data for the wt strain were copied and pasted into the "wt_ANOVA" worksheet. | # The first three columns containing the "MasterIndex", "ID", and "Standard Name" were copied from the "Master_Sheet" worksheet for the wt strain and pasted it into the "wt_ANOVA" worksheet. The columns containing the data for the wt strain were copied and pasted into the "wt_ANOVA" worksheet. | ||

# At the top of the first column to the right of the data, five column headers were created of the form wt_AvgLogFC_(TIME) where (TIME) was 15, 30, etc. | # At the top of the first column to the right of the data, five column headers were created of the form wt_AvgLogFC_(TIME) where (TIME) was 15, 30, etc. | ||

| − | # In the cell below the wt_AvgLogFC_t15 header, <code>=AVERAGE(</code> | + | # In the cell below the wt_AvgLogFC_t15 header, <code>=AVERAGE(</code> was typed. |

# Then all the data in row 2 associated with t15 was highlighted, and the closing parentheses key was pressed, followed by the "enter" key. | # Then all the data in row 2 associated with t15 was highlighted, and the closing parentheses key was pressed, followed by the "enter" key. | ||

# The equation in this cell was copied to the entire column of the 6188 other genes. | # The equation in this cell was copied to the entire column of the 6188 other genes. | ||

| Line 21: | Line 19: | ||

# In the first cell below this header, <code>=SUMSQ(</code> was typed. | # In the first cell below this header, <code>=SUMSQ(</code> was typed. | ||

# Then all the LogFC data in row 2 (but not the AvgLogFC) were highlighted, then the closing parentheses were added,and the "enter" key was pressed. | # Then all the LogFC data in row 2 (but not the AvgLogFC) were highlighted, then the closing parentheses were added,and the "enter" key was pressed. | ||

| − | # In the next empty column to the right of | + | # In the next empty column to the right of wt_ss_HO, the column headers wt_ss_(TIME) was created as in (3). |

| − | # Make a note of how many data points you have at each time point for your strain | + | # Make a note of how many data points you have at each time point for your strain. |

| − | # In the first cell below the header | + | # In the first cell below the header wt_ss_t15, <code>=SUMSQ(<range of cells for logFC_t15>)-COUNTA(<range of cells for logFC_t15>)*<AvgLogFC_t15>^2</code> was typed and the enter was pressed. |

| − | + | #* The phrase <range of cells for logFC_t15> was replaced by the data range associated with t15. | |

| − | #* The phrase <range of cells for logFC_t15> | + | #* The phrase <AvgLogFC_t15> was replaced by the cell number in which the AvgLogFC for t15 was computed. |

| − | #* The phrase <AvgLogFC_t15> | + | #* Upon completion of this single computation, the formula was copied throughout the column. |

| − | #* Upon completion of this single computation, | + | # This computation was repeated for the t30 through t120 data points. |

| − | # | + | # In the first column to the right of wt_ss_t120, the column header wt_SS_full was created. |

| − | # In the first column to the right of | + | # In the first row below this header, <code>=sum(<range of cells containing "ss" for each timepoint>)</code> was typed and enter was pressed. |

| − | # In the first row below this header, | + | # In the next two columns to the right, the headers (STRAIN)_Fstat and (STRAIN)_p-value were created. |

| − | # In the next two columns to the right, | + | # Recall the number of data points from (12): that total was called n. |

| − | # Recall the number of data points from ( | + | # In the first cell of the (STRAIN)_Fstat column, <code>=((n-5)/5)*(<(STRAIN)_ss_HO>-<(STRAIN)_SS_full>)/<(STRAIN)_SS_full></code> was typed and enter was pressed. |

| − | # In the first cell of the (STRAIN)_Fstat column, | + | #* The phrase wt_ss_HO was replaced with the cell designation. |

| − | #* | + | #* The phrase <wt_SS_full> was replaced with the cell designation. |

| − | + | #* The whole column was copied. | |

| − | #* | + | # In the first cell below the wt_p-value header, <code>=FDIST(<wt_Fstat>,5,n-5)</code> was typed, replacing the phrase <wt_Fstat> with the cell designation and the "n" as in (12) with the number of data points total. The whole column was copied. |

| − | #* | + | # A sanity check was performed before moving on to see if all of these computations were done correctly. |

| − | # In the first cell below the | + | #* Cell A1 was selected then on the Data tab, the Filter icon was chosen. |

| − | # | + | #* The drop-down arrow on the wt_p-value column was clicked on, then "Number Filters" was selected. In the window that appears, a criterion that will filter the data so that the p value has to be less than 0.05 was selected. |

| − | #* | + | #* Excel then only displayed the rows that correspond to data meeting that filtering criterion. A number appeared in the lower left hand corner of the window giving the number of rows that meet that criterion. |

| − | #* | + | #* Any filters that were applied were removed before making any additional calculations. |

| − | #* Excel | ||

| − | #* | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | ==== | + | ==== Calculating the Bonferroni and p-value Correction ==== |

| − | # | + | # The next two columns to the right were labeled with the same label, wt_Bonferroni_p-value. |

| − | + | # The equation <code>=<wt_p-value>*6189</code> was typed then copied into the entire column. | |

| − | + | # Any corrected p-values that were greater than 1 were corrected by typing the following formula into the first cell below the second wt_Bonferroni_p-value header: <code>=IF(wt_Bonferroni_p-value>1,1,wt_Bonferroni_p-value)</code>, where "wt_Bonferroni_p-value" refers to the cell in which the first Bonferroni p-value computation was made then the formula was copied throughout the column. | |

| − | |||

| − | |||

| − | # | ||

| − | # | ||

| − | |||

| − | |||

| − | |||

| − | + | ==== Calculating the Benjamini & Hochberg p value Correction ==== | |

| − | + | # A new worksheet named "wt_ANOVA_B-H" was created. | |

| + | # The "MasterIndex", "ID", and "Standard Name" columns were copied and pasted from the previous worksheet into the first two columns of the new worksheet. | ||

| + | # The unadjusted p-values were copied from your ANOVA worksheet and pasted into the Column D. | ||

| + | # Columns A, B, C, and D were selected and sorted by ascending values on Column D. The sort button was clicked on the toolbar, and in the window that appears column D was sorted from smallest to largest. | ||

| + | # "Rank" was typed in the cell E1. "1" was typed into cell E2 and "2" into cell E3. Both cells E2 and E3 were selected then the plus sign in the lower right-corner was double-clicked to fill the column with a series of numbers from 1 to 6189. | ||

| + | # The Benjamini and Hochberg p-value correction was calculated next. "wt_B-H_p-value" was typed into cell F1. The following formula was typed into cell F2: <code>=(D2*6189)/E2</code> and enter was press. That equation was copied throughout the entire column. | ||

| + | # "STRAIN_B-H_p-value" was typed into cell G1. | ||

| + | # The following formula was typed into cell G2: <code>=IF(F2>1,1,F2)</code> and enter was press. That equation was copied throughout the entire column. | ||

| + | # Columns A through G were selected then sorted by the MasterIndex in Column A in ascending order. | ||

| + | # Column G was copied and the values were pasted into the next column on the right of your ANOVA sheet. | ||

| + | # The .xlsx file was put into .zip folder and uploaded to the wiki. | ||

==== Sanity Check: Number of genes significantly changed ==== | ==== Sanity Check: Number of genes significantly changed ==== | ||

| Line 73: | Line 65: | ||

Before we move on to further analysis of the data, we want to perform a more extensive sanity check to make sure that we performed our data analysis correctly. We are going to find out the number of genes that are significantly changed at various p value cut-offs. | Before we move on to further analysis of the data, we want to perform a more extensive sanity check to make sure that we performed our data analysis correctly. We are going to find out the number of genes that are significantly changed at various p value cut-offs. | ||

| − | * | + | * From the wt_ANOVA worksheet, row 1 was selected, then the menu item Data > Filter > Autofilter. |

| − | + | * The drop-down arrow for the unadjusted p-value was selected and a criterion to the data so that the p-value has to be less than 0.05 was added to determine: How many genes have p < 0.05? and what is the percentage (out of 6189)?; how many genes have p < 0.01? and what is the percentage (out of 6189)?; how many genes have p < 0.001? and what is the percentage (out of 6189)?; and how many genes have p < 0.0001? and what is the percentage (out of 6189)? | |

| − | * | + | * To see the relationship of the less stringent Benjamini-Hochberg correction the data was filtered to determine: how many genes are p < 0.05 for the Bonferroni-corrected p value? and what is the percentage (out of 6189)? & how many genes are p < 0.05 for the Benjamini and Hochberg-corrected p value? and what is the percentage (out of 6189)? |

| − | + | * The numbers were organized as a table using this [[Media:BIOL367_F19_sample_p-value_slide.pptx | sample PowerPoint slide]] then compared to other strains after the slide was uploaded to the wiki. | |

| − | + | * Comparing results with known data: the expression of the gene NSR1 (ID: YGR159C) is known to be induced by cold shock, so the unadjusted, Bonferroni-corrected, and B-H-corrected p values were found of this gene, along with it's average Log fold change at each of the timepoints in the experiment to determine whether ''NSR1'' changed expression due to cold shock. | |

| − | + | * The unadjusted, Bonferroni-corrected, and B-H-corrected p values for the gene YBR160W/CDC28 in the dataset were found along with the average Log fold change at each of the timepoints in the experimentto determine whether gene expression changed due to cold shock. | |

| − | |||

| − | * | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | * | ||

| − | |||

| − | |||

| − | * Comparing results with known data: | ||

| − | * | ||

==== Clustering and GO Term Enrichment with stem (part 2)==== | ==== Clustering and GO Term Enrichment with stem (part 2)==== | ||

| − | # | + | # The microarray data file was prepared for loading into STEM. |

| − | #* | + | #* A new worksheet was inserted into the Excel workbook named "wt_stem". |

| − | #* | + | #* All of the data from the "wt_ANOVA" worksheet was selected, copied, and pasted into the "wt_stem" worksheet. |

| − | #** | + | #** The leftmost column with the column header "Master_Index" was renamed to "SPOT". Column B with header "ID" was renamed to "Gene Symbol". Then the the column named "Standard_Name" was deleted. |

| − | #** | + | #** The data on the B-H corrected p-value was filtered to be > 0.05. |

| − | #*** Once the data | + | #*** Once the data was filtered, all of the rows (except for your header row) were selected and deleted by right-clicking and choosing "Delete Row" from the context menu. The filter was undone to ensure that only the genes with a "significant" change in expression, and not the noise, will be used. |

| − | #** | + | #** All of the data columns except for the Average Log Fold change columns for each timepoint (for example, wt_AvgLogFC_t15, etc.) were deleted. |

| − | #** | + | #** The data columns were renamed with just the time and units (for example, 15m, 30m, etc.). |

| − | #** | + | #** The work was saved using ''Save As'' to save this spreadsheet as Text (Tab-delimited) (*.txt). |

| − | # | + | # Next the STEM software was downloaded and extracted from [http://www.cs.cmu.edu/~jernst/stem/ the STEM web site]. |

| − | + | #* The [http://www.sb.cs.cmu.edu/stem/stem.zip download link] was selected and the <code>stem.zip</code> file was downloaded to Desktop. | |

| − | #* | + | #* The file was unzipped creating a folder called <code>stem</code>. |

| − | #* | + | #** Next the Gene Ontology ([https://lmu.box.com/s/t8i5s1z1munrcfxzzs7nv7q2edsktxgl "gene_ontology.obo"]) and yeast GO annotations ([https://lmu.box.com/s/zlr1s8fjogfssa1wl59d5shyybtm1d49 "gene_association.sgd.gz"]) were downloaded and placed in the <code>stem</code> folder. |

| − | + | #*Inside the folder, the <code>stem.jar</code> was double-clicked to launch the STEM program. | |

| − | #** | ||

| − | |||

| − | |||

| − | #*Inside the folder, | ||

# '''Running STEM''' | # '''Running STEM''' | ||

| − | ## In section 1 (Expression Data Info) of the the main STEM interface window, | + | ## In section 1 (Expression Data Info) of the the main STEM interface window, the ''Browse...'' button was clicked and the text file created was selected. |

| − | ##* | + | ##* The radio button ''No normalization/add 0'' was selected. |

| − | ##* | + | ##* The box next to ''Spot IDs included in the data file'' was checked. |

| − | ## In section 2 (Gene Info) of the main STEM interface window, | + | ## In section 2 (Gene Info) of the main STEM interface window, the default selection for the three drop-down menu selections for Gene Annotation Source, Cross Reference Source, and Gene Location Source were left as "User provided". |

| − | ## | + | ## The "Browse..." button to the right of the "Gene Annotation File" item was clicked and the "gene_association.sgd.gz" was selected and opened. |

| − | ## In section 3 (Options) of the main STEM interface window, | + | ## In section 3 (Options) of the main STEM interface window, the Clustering Method "STEM Clustering Method" was selected. |

| − | ## In section 4 (Execute) | + | ## In section 4 (Execute) the yellow Execute button was selected to run STEM. |

| − | |||

* The sanity check in step 22 of "Statistical Analysis Part 1: ANOVA" showed that 2528 out of the 6189 samples have a p-value of less than 0.05 | * The sanity check in step 22 of "Statistical Analysis Part 1: ANOVA" showed that 2528 out of the 6189 samples have a p-value of less than 0.05 | ||

| Line 125: | Line 101: | ||

== Data == | == Data == | ||

| − | [[media:BIOL367 F19 microarray-data wtMAVILA9 Benjamini&Hochberg Pvalue Correction.zip | Microarray Data]] | + | [[media:BIOL367 F19 microarray-data wtMAVILA9 Benjamini&Hochberg Pvalue Correction.zip | Microarray Data .zip]] |

| + | |||

| + | [[media:BIOL367 F19 microarray-data wtMAVILA9 Benjamini^0Hochberg Pvalue Correction.txt | Microarray Data .txt]] | ||

| + | |||

| + | [[media:BIOL367 F19 wt p-value slide mavila9.zip | Sanity Check Table]] | ||

| + | |||

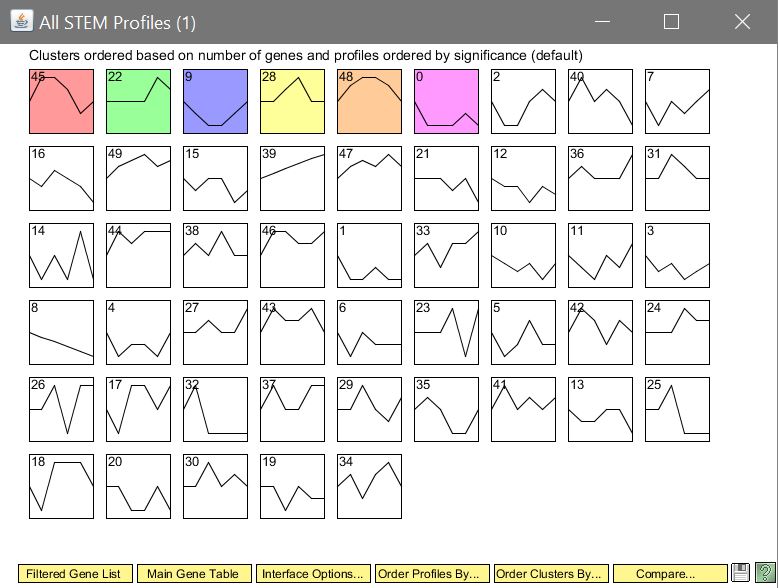

| + | [[image:Stem results1.JPG]] | ||

== Conclusion == | == Conclusion == | ||

| − | In conclusion, the wild type p-values were calculated for each gene allowing statistical significance to be determined. Next Bonferroni and Benjamini & Hochberg p-value adjustments were done to correct for the multiple testing problem. | + | In conclusion, the wild type p-values were calculated for each gene allowing statistical significance to be determined. Next Bonferroni and Benjamini & Hochberg p-value adjustments were done to correct for the multiple testing problem. The microarray data was prepared to be run on the STEM program. |

== Acknowledgements == | == Acknowledgements == | ||

Latest revision as of 14:27, 24 October 2019


Purpose

This investigation was done to analyze the microarray data to determine changes in gene expression after a cold shock.

Methods & Results

ANOVA: Part 1

- A new worksheet was created named "wt_ANOVA".

- The first three columns containing the "MasterIndex", "ID", and "Standard Name" were copied from the "Master_Sheet" worksheet for the wt strain and pasted into the "wt_ANOVA" worksheet. The columns containing the data for the wt strain were then copied and pasted into the "wt_ANOVA" worksheet.

- At the top of the first column to the right of the data, five column headers were created of the form wt_AvgLogFC_(TIME) where (TIME) was 15, 30, etc.

- In the cell below the wt_AvgLogFC_t15 header, =AVERAGE( was typed.

- Then all the data in row 2 associated with t15 was highlighted, and the closing parenthesis key was pressed, followed by the "enter" key.

- The equation in this cell was copied to the entire column of the 6188 other genes.

- Steps (3) through (6) were repeated with the t30, t60, t90, and the t120 data.

- Next in the first empty column to the right of the wt_AvgLogFC_t120 calculation, the column header wt_ss_HO was created.

- In the first cell below this header, =SUMSQ( was typed.

- Then all the LogFC data in row 2 (but not the AvgLogFC) were highlighted, the closing parenthesis was added, and the "enter" key was pressed.

- In the next empty columns to the right of wt_ss_HO, the column headers wt_ss_(TIME) were created as in (3).

- The number of data points at each time point for the wt strain was noted.

- In the first cell below the header wt_ss_t15, =SUMSQ(<range of cells for logFC_t15>)-COUNTA(<range of cells for logFC_t15>)*<AvgLogFC_t15>^2 was typed and enter was pressed.

- The phrase <range of cells for logFC_t15> was replaced by the data range associated with t15.

- The phrase <AvgLogFC_t15> was replaced by the cell number in which the AvgLogFC for t15 was computed.

- Upon completion of this single computation, the formula was copied throughout the column.

- This computation was repeated for the t30 through t120 data points.

- In the first column to the right of wt_ss_t120, the column header wt_SS_full was created.

- In the first row below this header, =sum(<range of cells containing "ss" for each timepoint>) was typed and enter was pressed.

- In the next two columns to the right, the headers (STRAIN)_Fstat and (STRAIN)_p-value were created.

- Recall the number of data points from (12): that total was called n.

- In the first cell of the (STRAIN)_Fstat column, =((n-5)/5)*(<(STRAIN)_ss_HO>-<(STRAIN)_SS_full>)/<(STRAIN)_SS_full> was typed and enter was pressed.

- The phrase wt_ss_HO was replaced with the cell designation.

- The phrase <wt_SS_full> was replaced with the cell designation.

- The whole column was copied.

- In the first cell below the wt_p-value header, =FDIST(<wt_Fstat>,5,n-5) was typed, replacing the phrase <wt_Fstat> with the cell designation and the "n" as in (12) with the number of data points total. The whole column was copied.

- A sanity check was performed before moving on to see if all of these computations were done correctly.

- Cell A1 was selected then on the Data tab, the Filter icon was chosen.

- The drop-down arrow on the wt_p-value column was clicked on, then "Number Filters" was selected. In the window that appears, a criterion that will filter the data so that the p value has to be less than 0.05 was selected.

- Excel then only displayed the rows that correspond to data meeting that filtering criterion. A number appeared in the lower left hand corner of the window giving the number of rows that meet that criterion.

- Any filters that were applied were removed before making any additional calculations.
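The spreadsheet arithmetic above can be cross-checked for a single gene with a short script. This is a minimal sketch, not the procedure used in this entry: the function name `anova_f_stat` and the input layout (one list of log fold change replicates per timepoint) are assumptions made for illustration, and blank cells are assumed to have been dropped beforehand, which is what COUNTA does implicitly.

```python
def anova_f_stat(groups):
    """Return (ss_h0, ss_full, f_stat) for one gene.

    groups: one list of log fold change replicates per timepoint
    (hypothetical layout; the worksheet stores these side by side in a row).
    """
    values = [x for group in groups for x in group]
    n = len(values)   # total number of data points, "n" in the text
    k = len(groups)   # number of timepoints, 5 in the text

    # Sum of squares under the null hypothesis: =SUMSQ(all LogFC cells)
    ss_h0 = sum(x * x for x in values)

    # Full-model sum of squares: SUMSQ(range) - COUNTA(range) * AvgLogFC^2,
    # computed per timepoint and then added together.
    ss_full = 0.0
    for group in groups:
        avg = sum(group) / len(group)
        ss_full += sum(x * x for x in group) - len(group) * avg ** 2

    # =((n-5)/5)*(ss_HO - SS_full)/SS_full, written with k in place of 5
    f_stat = ((n - k) / k) * (ss_h0 - ss_full) / ss_full
    return ss_h0, ss_full, f_stat
```

The p-value step is left out to keep the sketch free of third-party packages. If SciPy is available, `scipy.stats.f.sf(f_stat, k, n - k)` returns the same upper-tail probability that FDIST computes.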

Calculating the Bonferroni and p-value Correction

- The next two columns to the right were labeled with the same label, wt_Bonferroni_p-value.

- The equation =<wt_p-value>*6189 was typed then copied into the entire column.

- Any corrected p-values that were greater than 1 were corrected by typing the following formula into the first cell below the second wt_Bonferroni_p-value header: =IF(wt_Bonferroni_p-value>1,1,wt_Bonferroni_p-value), where "wt_Bonferroni_p-value" refers to the cell in which the first Bonferroni p-value computation was made. The formula was then copied throughout the column.
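The two Bonferroni columns amount to multiplying each p-value by the number of tests and capping the result at 1. A minimal sketch of that calculation follows; the function name `bonferroni` is hypothetical, and the number of tests is taken from the length of the input list (6189 in this dataset).

```python
def bonferroni(p_values):
    """Bonferroni-correct a list of unadjusted p-values."""
    m = len(p_values)  # number of tests (6189 genes in the text)
    # =<p-value>*m followed by =IF(x>1,1,x), done in one step with min()
    return [min(p * m, 1.0) for p in p_values]
```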

Calculating the Benjamini & Hochberg p value Correction

- A new worksheet named "wt_ANOVA_B-H" was created.

- The "MasterIndex", "ID", and "Standard Name" columns were copied and pasted from the previous worksheet into the first three columns of the new worksheet.

- The unadjusted p-values were copied from the wt_ANOVA worksheet and pasted into Column D.

- Columns A, B, C, and D were selected and sorted by ascending values on Column D. The sort button was clicked on the toolbar, and in the window that appears column D was sorted from smallest to largest.

- "Rank" was typed in the cell E1. "1" was typed into cell E2 and "2" into cell E3. Both cells E2 and E3 were selected then the plus sign in the lower right-corner was double-clicked to fill the column with a series of numbers from 1 to 6189.

- The Benjamini and Hochberg p-value correction was calculated next. "wt_B-H_p-value" was typed into cell F1. The following formula was typed into cell F2: =(D2*6189)/E2 and enter was pressed. That equation was copied throughout the entire column.

- "STRAIN_B-H_p-value" was typed into cell G1.

- The following formula was typed into cell G2: =IF(F2>1,1,F2) and enter was pressed. That equation was copied throughout the entire column.

- Columns A through G were selected then sorted by the MasterIndex in Column A in ascending order.

- Column G was copied and the values were pasted into the next column on the right of the wt_ANOVA sheet.

- The .xlsx file was put into a .zip folder and uploaded to the wiki.
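The sort, rank, scale, and re-sort steps above can be sketched as follows. This mirrors only the spreadsheet steps described in this entry; the function name `benjamini_hochberg_simple` is hypothetical. Standard Benjamini-Hochberg adjustments (for example `p.adjust(method = "BH")` in R) also take a running minimum from the largest rank downward so that adjusted values never decrease with rank. The worksheet procedure does not include that step, so this sketch omits it as well.

```python
def benjamini_hochberg_simple(p_values):
    """Apply p * m / rank, capped at 1, and return values in input order."""
    m = len(p_values)  # number of tests (6189 genes in the text)

    # Sorting indices by p-value plays the role of sorting on Column D;
    # writing results back by index replaces the final sort on MasterIndex.
    order = sorted(range(m), key=lambda i: p_values[i])

    adjusted = [0.0] * m
    for rank, i in enumerate(order, start=1):   # "Rank" column, 1..m
        scaled = p_values[i] * m / rank         # =(D2*6189)/E2
        adjusted[i] = min(scaled, 1.0)          # =IF(F2>1,1,F2)
    return adjusted
```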

Sanity Check: Number of genes significantly changed

Before we move on to further analysis of the data, we want to perform a more extensive sanity check to make sure that we performed our data analysis correctly. We are going to find out the number of genes that are significantly changed at various p value cut-offs.

- From the wt_ANOVA worksheet, row 1 was selected, then the menu item Data > Filter > Autofilter was chosen.

- The drop-down arrow for the unadjusted p-value was selected and a filter criterion was applied so that only rows with a p-value below the cut-off were shown. This was used to determine: how many genes have p < 0.05, and what is the percentage (out of 6189)?; how many genes have p < 0.01, and what is the percentage (out of 6189)?; how many genes have p < 0.001, and what is the percentage (out of 6189)?; and how many genes have p < 0.0001, and what is the percentage (out of 6189)?

- To compare the stringent Bonferroni correction with the less stringent Benjamini-Hochberg correction, the data was filtered to determine: how many genes are p < 0.05 for the Bonferroni-corrected p value, and what is the percentage (out of 6189)?; and how many genes are p < 0.05 for the Benjamini and Hochberg-corrected p value, and what is the percentage (out of 6189)?

- The numbers were organized as a table using this sample PowerPoint slide then compared to other strains after the slide was uploaded to the wiki.

- Comparing results with known data: the expression of the gene NSR1 (ID: YGR159C) is known to be induced by cold shock, so the unadjusted, Bonferroni-corrected, and B-H-corrected p values were found for this gene, along with its average Log fold change at each of the timepoints in the experiment, to determine whether NSR1 changed expression due to cold shock.

- The unadjusted, Bonferroni-corrected, and B-H-corrected p values for the gene YBR160W/CDC28 in the dataset were found, along with the average Log fold change at each of the timepoints in the experiment, to determine whether gene expression changed due to cold shock.
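The filter-and-count checks above can also be reproduced outside Excel. The sketch below is illustrative only: the function name `count_below` and its default cut-offs are assumptions chosen to match the thresholds listed in this section, and it works on any list of p-values (unadjusted, Bonferroni-corrected, or B-H-corrected).

```python
def count_below(p_values, cutoffs=(0.05, 0.01, 0.001, 0.0001)):
    """Map each cut-off to (count, percentage) of p-values strictly below it."""
    total = len(p_values)  # 6189 genes in the text
    summary = {}
    for cutoff in cutoffs:
        count = sum(1 for p in p_values if p < cutoff)
        summary[cutoff] = (count, 100.0 * count / total)
    return summary
```

Calling the function once per p-value column gives the rows of the sanity check table.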

Clustering and GO Term Enrichment with stem (part 2)

- The microarray data file was prepared for loading into STEM.

- A new worksheet was inserted into the Excel workbook named "wt_stem".

- All of the data from the "wt_ANOVA" worksheet was selected, copied, and pasted into the "wt_stem" worksheet.

- The leftmost column with the column header "Master_Index" was renamed to "SPOT". Column B with header "ID" was renamed to "Gene Symbol". Then the column named "Standard_Name" was deleted.

- The data on the B-H corrected p-value was filtered to be > 0.05.

- Once the data was filtered, all of the rows (except for the header row) were selected and deleted by right-clicking and choosing "Delete Row" from the context menu. The filter was then removed, leaving only the genes with a "significant" change in expression, and not the noise, for use in STEM.

- All of the data columns except for the Average Log Fold change columns for each timepoint (for example, wt_AvgLogFC_t15, etc.) were deleted.

- The data columns were renamed with just the time and units (for example, 15m, 30m, etc.).

- The work was saved using Save As to save this spreadsheet as Text (Tab-delimited) (*.txt).

- Next the STEM software was downloaded and extracted from the STEM web site.

- The download link was selected and the stem.zip file was downloaded to the Desktop.

- The file was unzipped, creating a folder called stem.

- Next the Gene Ontology ("gene_ontology.obo") and yeast GO annotations ("gene_association.sgd.gz") were downloaded and placed in the stem folder.

- Inside the folder, stem.jar was double-clicked to launch the STEM program.

- Running STEM

- In section 1 (Expression Data Info) of the main STEM interface window, the Browse... button was clicked and the text file created was selected.

- The radio button No normalization/add 0 was selected.

- The box next to Spot IDs included in the data file was checked.

- In section 2 (Gene Info) of the main STEM interface window, the default selection for the three drop-down menu selections for Gene Annotation Source, Cross Reference Source, and Gene Location Source were left as "User provided".

- The "Browse..." button to the right of the "Gene Annotation File" item was clicked and the "gene_association.sgd.gz" was selected and opened.

- In section 3 (Options) of the main STEM interface window, the Clustering Method "STEM Clustering Method" was selected.

- In section 4 (Execute) the yellow Execute button was selected to run STEM.


- The sanity check in step 22 of "Statistical Analysis Part 1: ANOVA" showed that 2528 out of the 6189 samples have a p-value of less than 0.05.
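The preparation of the STEM input file described in this section (keep genes with a B-H-corrected p-value of 0.05 or less, keep only the average log fold change columns, rename the headers, save as tab-delimited text) can be sketched as below. This is an illustration under stated assumptions rather than the workflow actually used, which was done by hand in Excel. The function names and the dictionary keys `bh_p` and `avg_log_fc` are hypothetical, while "SPOT", "Gene Symbol", and the time labels follow the headers named in the text.

```python
import csv
import io


def build_stem_table(rows, time_labels, alpha=0.05):
    """Return header plus data rows for genes with B-H p-value <= alpha.

    rows: list of dicts with hypothetical keys "MasterIndex", "ID",
    "bh_p", and "avg_log_fc" (one average per timepoint).
    """
    table = [["SPOT", "Gene Symbol"] + list(time_labels)]
    for row in rows:
        if row["bh_p"] > alpha:
            continue  # same effect as filtering for > 0.05 and deleting
        table.append([row["MasterIndex"], row["ID"]] + list(row["avg_log_fc"]))
    return table


def to_tab_delimited(table):
    """Serialize the table as tab-delimited text, one line per row."""
    buffer = io.StringIO()
    csv.writer(buffer, delimiter="\t", lineterminator="\n").writerows(table)
    return buffer.getvalue()
```

Writing the returned string to a .txt file gives a file with the same column layout as the "wt_stem" worksheet export.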

Data

Conclusion

In conclusion, the wild type p-values were calculated for each gene allowing statistical significance to be determined. Next Bonferroni and Benjamini & Hochberg p-value adjustments were done to correct for the multiple testing problem. The microarray data was prepared to be run on the STEM program.

Acknowledgements

I'd like to thank User:Knguye66, User:Jcowan4, and User:Cdomin12 for helping with this investigation. Except for what is noted above, this individual journal entry was completed by me and not copied from another source. Mavila9 (talk) 14:24, 20 October 2019 (PDT)

References

Week 8. Retrieved October 20, 2019, from https://xmlpipedb.cs.lmu.edu/biodb/fall2019/index.php/Week_8