Difference between revisions of "Mbalducc Week 3"

Latest revision as of 00:04, 19 September 2017

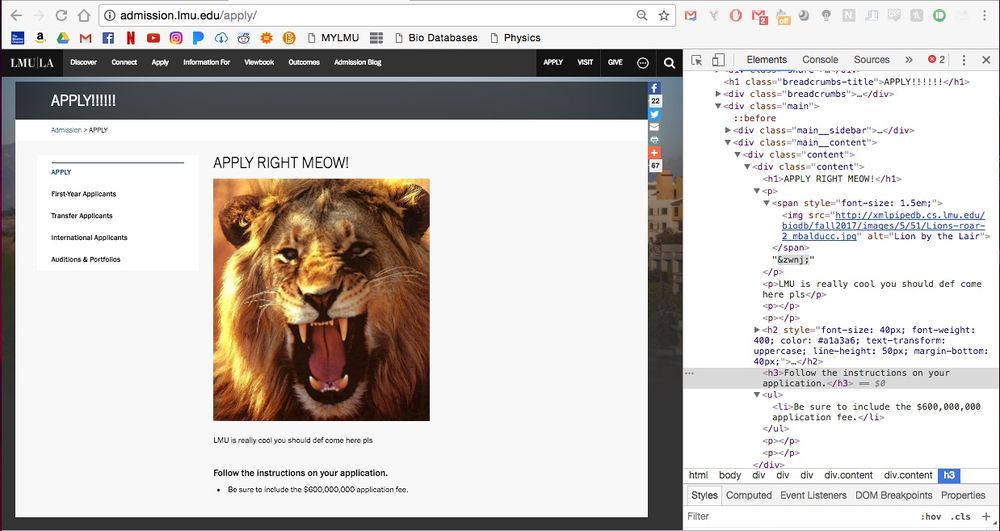

Hack-a-Page

Screenshots

LMU Apply Page With Developer Tools Open

LMU Apply Page Without Developer Tools Open

Curl Code

The code I used to translate the DNA sequence from the command line was:

curl -d "pre_text=cgtatgctaataccatgttccgcgtataacccagccgccagttccgctggcggcatttta&submit=TRANSLATE SEQUENCE" http://web.expasy.org/cgi-bin/translate/dna_aa
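The same request can also be written with one flag per form field, which makes the two fields easier to see. This is only a sketch, not the command I ran for the assignment: the function name `translate_dna` is made up for illustration, and `--data-urlencode` is swapped in for `-d` so that the space in "TRANSLATE SEQUENCE" is percent-encoded before it is sent. It assumes the server still accepts the same `pre_text` and `submit` fields at the URL above.

```shell
#!/bin/sh
# Endpoint taken from the curl command above (assumed to still be valid).
TRANSLATE_URL="http://web.expasy.org/cgi-bin/translate/dna_aa"

# Hypothetical helper: POST a DNA sequence to the translate form.
# $1 is the DNA sequence to send as the pre_text form field.
translate_dna() {
    curl -s \
        --data-urlencode "pre_text=$1" \
        --data-urlencode "submit=TRANSLATE SEQUENCE" \
        "$TRANSLATE_URL"
}
```

Calling `translate_dna cgtatgctaataccatgttccgcgtataacccagccgccagttccgctggcggcatttta` should print the server's HTML response, as the original command does.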

Studying the curl'ed code

- The server's response contains links to other resources:

  - http://web.expasy.org/favicon.ico. This links to an icon used on the page.

  - http://web.expasy.org/css/sib_css/sib.css. This links to a CSS stylesheet with the header "Swiss Institute of Bioinformatics".

  - http://web.expasy.org/css/sib_css/sib_print.css. This links to another stylesheet from the "Swiss Institute of Bioinformatics".

  - http://web.expasy.org/css/base.css. This links to the CSS for Genevian Resources.

  - http://en.wikipedia.org/wiki/Open_reading_frame. This links to the Wikipedia page for "Open reading frame".

- The server's response contains identifiers:

  - "id=sib_top" This identifies the top of the page.

  - "id=sib_container" This identifies the container that holds the entire page.

  - "id=sib_body" This identifies the body of the page, where the results from the translation are located.

  - "id=sib_footer" This identifies the footer of the page.
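Links and identifiers like the ones listed above could also be pulled out on the command line instead of being read off the developer tools. The following is a rough sketch under stated assumptions: the three-line `sample_html` is a made-up stand-in for the real response, the helper names `list_links` and `list_ids` are hypothetical, and the patterns assume attribute values are wrapped in double quotes.

```shell
#!/bin/sh
# Hypothetical sample standing in for the server's response.
sample_html='<link rel="stylesheet" href="http://web.expasy.org/css/base.css">
<div id="sib_top"></div>
<div id="sib_body"><a href="http://en.wikipedia.org/wiki/Open_reading_frame">ORF</a></div>'

# Print every double-quoted href or src value, one per line.
list_links() {
    grep -oE '(href|src)="[^"]*"' | sed -E 's/^(href|src)="//; s/"$//'
}

# Print every double-quoted id value, one per line.
# The leading space keeps attributes such as data-id from matching.
list_ids() {
    grep -oE ' id="[^"]*"' | sed 's/^ id="//; s/"$//'
}

printf '%s\n' "$sample_html" | list_links
printf '%s\n' "$sample_html" | list_ids
```

To try this on the real page, the output of the curl command could be piped into `list_links` or `list_ids` in place of the sample.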

Just the Answers

The code I used to extract just the answers from the translation was:

curl -d "pre_text=cgtatgctaataccatgttccgcgtataacccagccgccagttccgctggcggcatttta&submit=TRANSLATE SEQUENCE" http://web.expasy.org/cgi-bin/translate/dna_aa | sed "1,47d" | sed "s/<[^>]*>//g" | sed "14,50d"
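The pipeline has three stages: `sed "1,47d"` deletes the first 47 lines of the response, `sed "s/<[^>]*>//g"` removes everything between a `<` and the next `>` on each line, and `sed "14,50d"` deletes lines 14 through 50 of what is left. The sketch below runs the same three stages on a small offline sample. The sample lines and the function names `sample_response` and `extract_answers` are invented for illustration, and the line ranges are shrunk to fit the sample.

```shell
#!/bin/sh
# Hypothetical five-line response; the real page is much longer.
sample_response() {
    cat <<'EOF'
<html>
<head><title>Translate</title></head>
<b>Frame 1</b><br>
R <font color="red">M</font> L I P<br>
<div id="sib_footer">footer</div>
EOF
}

# Same three stages as the pipeline above, with smaller line ranges:
# 1. drop the two header lines,
# 2. strip every <...> tag from the remaining lines,
# 3. drop everything from the third remaining line on (the footer).
extract_answers() {
    sed "1,2d" | sed "s/<[^>]*>//g" | sed "3,5d"
}

sample_response | extract_answers
```

Because sed works one line at a time, the tag-stripping stage would miss a tag that is split across two lines, and the line numbers in the first and last stages have to be adjusted by hand if the length of the page changes.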

Notebook

I started this week by working on the hack-a-page section. I opened LMU's apply page in the browser's developer tools, changed the words on screen, and added a photo by uploading it to our wiki and then linking to it from within the tools.

Next, I wrote the curl command after figuring out that the "pre_text" field needed to hold the DNA sequence and that the "submit" field needed to carry the value of the "TRANSLATE SEQUENCE" button.

Then I looked at the response page in the developer tools and found the links and identifiers it contained.

The last thing I did for this individual journal was write a sed command that shows only the answers. This was tricky, as I had trouble figuring out the exact expression I needed to strip the links from the lines that held the results I wanted to show.

Acknowledgments

I worked with my homework partner, Blair Hamilton, on this assignment. We met after class to work on the "hack-a-page" section of the assignment together.

While I worked with the people noted above, this individual journal entry was completed by me and not copied from another source.

References

LMU BioDB 2017. (2017). Week 3. Retrieved September 14, 2017, from https://xmlpipedb.cs.lmu.edu/biodb/fall2017/index.php/Week_3

Palmo, C. (n.d.). Lion's Roar 2 [Photograph]. Retrieved September 14, 2017, from https://candacepalmo.files.wordpress.com/2014/03/lions-roar-2.jpg
